What Is a VMware DRS Cluster?

A cluster is a group of hosts connected to each other with special software that makes them elements of a single system. At least two hosts (also called nodes) must be connected to create a cluster. When hosts are added to the cluster, their resources become the cluster’s resources and are managed by the cluster.

The most common types of VMware vSphere clusters are High Availability (HA) and Distributed Resource Scheduler (DRS) clusters. HA clusters are designed to provide high availability of virtual machines and the services running on them: if a host fails, HA immediately restarts its virtual machines on another ESXi host. DRS clusters provide load balancing among ESXi hosts, and in today’s blog post, we are going to explore the DRS cluster system in depth.

How Does the DRS Cluster Work?

Distributed Resource Scheduler (DRS) is a type of VMware vSphere cluster that provides load balancing by migrating VMs from a heavily loaded ESXi host to another host that has enough computing resources, all while the VMs are still running. This approach prevents ESXi hosts from becoming overloaded. Virtual machine workloads can vary over time, and if an ESXi host is overloaded, the performance of all VMs running on that host is reduced. The VMware DRS cluster helps in this situation by migrating VMs automatically.
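
To make the idea concrete, here is a toy model of a single load-balancing pass. It is a hypothetical sketch, not VMware’s DRS algorithm. It assumes memory is the only resource and measures imbalance as the standard deviation of per-host memory utilization. It then recommends the one migration that lowers that value the most. The names `imbalance`, `recommend_migration` and `min_gain` are illustrative only.

```python
import statistics

# Hypothetical cluster model: host name -> (memory capacity in GB, {VM name: memory demand in GB}).


def imbalance(hosts):
    """Population standard deviation of per-host memory utilization (0.0 to 1.0)."""
    loads = [sum(vms.values()) / capacity for capacity, vms in hosts.values()]
    return statistics.pstdev(loads)


def recommend_migration(hosts, min_gain=0.01):
    """Return (vm, source, target) for the single move that lowers imbalance the most.

    Returns None when no move that fits on the target host improves the
    imbalance by at least `min_gain` (a stand-in for a migration threshold).
    """
    best, best_score = None, imbalance(hosts) - min_gain
    for src, (_, src_vms) in hosts.items():
        for vm, demand in src_vms.items():
            for dst, (dst_cap, dst_vms) in hosts.items():
                if dst == src or sum(dst_vms.values()) + demand > dst_cap:
                    continue  # same host, or the VM would not fit on the target
                # Simulate the move on a copy so the real model is left untouched.
                trial = {name: (cap, dict(vms)) for name, (cap, vms) in hosts.items()}
                del trial[src][1][vm]
                trial[dst][1][vm] = demand
                score = imbalance(trial)
                if score < best_score:
                    best, best_score = (vm, src, dst), score
    return best


cluster = {
    "esx1": (100, {"db": 45, "web": 20, "app": 5}),  # 70% utilized
    "esx2": (100, {"mail": 10}),                     # 10% utilized
}
print(recommend_migration(cluster))  # ('web', 'esx1', 'esx2')
```

Moving `web` leaves the two hosts at 50% and 30%, which is the most even split reachable with one migration in this example. A real scheduler weighs CPU as well as memory, and it also weighs the cost of each migration.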

DRS is usually used together with HA, combining failover with load balancing. In case of a failover, HA restarts the virtual machines on other ESXi hosts, and DRS, being aware of the available computing resources, provides recommendations for VM placement. The live migrations that DRS performs for load balancing use vMotion technology, which is transparent to users and applications.

When Are DRS Clusters Used?

The DRS solution is usually used in large VMware virtual environments with uneven VM workloads to provide efficient resource management. Combining DRS and HA results in a high-availability cluster with load balancing. DRS is also useful for automatically migrating VMs off an ESXi server that an administrator puts into maintenance mode. A host must be in maintenance mode before you perform maintenance operations such as firmware upgrades, security patch installation, and ESXi updates, and no virtual machines can be running on an ESXi server while it enters maintenance mode.
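
The evacuation step can be illustrated with a toy placement planner. This is a hypothetical sketch, not how vCenter actually chooses destinations. The `Host` class, the capacity units and the greedy "most free memory first" heuristic are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    name: str
    cpu: int                                 # CPU capacity in MHz (illustrative)
    mem: int                                 # memory capacity in MB (illustrative)
    vms: dict = field(default_factory=dict)  # VM name -> (cpu, mem) demand

    def free(self):
        """Return (free_cpu, free_mem) given the VMs currently on this host."""
        return (self.cpu - sum(c for c, _ in self.vms.values()),
                self.mem - sum(m for _, m in self.vms.values()))


def plan_evacuation(leaving, others):
    """Plan a destination for every VM on `leaving` before it enters maintenance mode.

    Greedy heuristic: place the largest VMs (by memory) first, each on the
    remaining host with the most free memory that can still hold it.
    Returns a list of (vm, target) pairs, or None if some VM fits nowhere.
    The hosts are not modified; the plan is only a recommendation.
    """
    free = {h.name: list(h.free()) for h in others}
    plan = []
    for vm, (cpu, mem) in sorted(leaving.vms.items(),
                                 key=lambda item: item[1][1], reverse=True):
        fits = [n for n, (fc, fm) in free.items() if fc >= cpu and fm >= mem]
        if not fits:
            return None  # not enough spare capacity: the host cannot be evacuated
        target = max(fits, key=lambda n: free[n][1])
        free[target][0] -= cpu
        free[target][1] -= mem
        plan.append((vm, target))
    return plan


esx1 = Host("esx1", 10000, 32768, {"db": (3000, 16384), "web": (1000, 4096)})
esx2 = Host("esx2", 10000, 32768, {"app": (2000, 8192)})
esx3 = Host("esx3", 10000, 32768)
print(plan_evacuation(esx1, [esx2, esx3]))  # [('db', 'esx3'), ('web', 'esx2')]
```

Returning `None` mirrors the practical rule behind the paragraph above: if the remaining hosts lack spare capacity, the running VMs have nowhere to go, and the host cannot enter maintenance mode.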

DRS Clustering Features

The main DRS clustering features are Load Balancing, Distributed Power Management, and Affinity Rules.

Load Balancing is the feature that optimizes the utilization of computing resources (CPU and RAM). DRS continuously monitors the processor and memory utilization of each VM, as well as the load level of each ESXi host in the cluster. It checks the resource demands of the VMs and determines whether there is a better host for a VM to be placed on. If there is such a host, DRS makes a recommendation to migrate the VM. Depending on your settings, the recommendation is applied either automatically or manually. By default, DRS evaluates the cluster every 5 minutes and generates these recommendations when they are needed. The figure below illustrates DRS performing VM migration for load balancing purposes.

Distributed Power Management (DPM) is a power-saving feature that compares the capacity of cluster resources to the resources used by the VMs in the cluster. If there are enough free resources in the cluster, DPM recommends migrating the VMs off lightly loaded ESXi hosts and powering those hosts off. If the cluster needs more resources, DPM sends wake-up signals to power hosts back on. For this to work, the ESXi servers must support one of the following power management protocols: Wake-On-LAN (WOL), Hewlett-Packard Integrated Lights-Out (iLO), or Intelligent Platform Management Interface (IPMI). With the DRS cluster’s DPM, you can save up to 40% in electricity costs.
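
The DPM decision described above can be sketched as a utilization check. This is a hypothetical model, not VMware’s implementation. The 40% and 80% band is an arbitrary illustrative choice, not a VMware default. "Standby" here means a host that DPM has powered off.

```python
# Hypothetical model: host name -> (capacity, demand, powered_on) in arbitrary units.
LOW, HIGH = 0.40, 0.80  # illustrative utilization band, not VMware defaults


def dpm_recommendation(hosts, low=LOW, high=HIGH):
    """Return ("power_off", host), ("power_on", host), or None.

    Utilization is total VM demand divided by the capacity of the powered-on
    hosts (at least one host is assumed to be powered on). Below `low`, suggest
    powering off the least-loaded host if the others could absorb its VMs
    without exceeding `high`. Above `high`, suggest waking the largest standby host.
    """
    on = {n: (cap, dem) for n, (cap, dem, powered) in hosts.items() if powered}
    standby = [n for n, (_, _, powered) in hosts.items() if not powered]
    capacity = sum(cap for cap, _ in on.values())
    demand = sum(dem for _, dem in on.values())
    utilization = demand / capacity

    if utilization > high and standby:
        return ("power_on", max(standby, key=lambda n: hosts[n][0]))
    if utilization < low and len(on) > 1:
        victim = min(on, key=lambda n: on[n][1])  # least-loaded powered-on host
        if demand / (capacity - on[victim][0]) <= high:
            return ("power_off", victim)
    return None


cluster = {"esx1": (100, 30, True), "esx2": (100, 20, True), "esx3": (100, 10, True)}
print(dpm_recommendation(cluster))  # ('power_off', 'esx3')
```

Here the cluster runs at 20% utilization. After its VMs move, powering off `esx3` leaves the other two hosts at 30%, which is still inside the band.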

Affinity Rules give you some control over the placement of VMs on hosts.

There are two types of rules for keeping VMs together or separated:

- affinity or anti-affinity rules between individual VMs.

- affinity or anti-affinity rules between groups of VMs and groups of ESXi hosts.

Let’s explore how these rules work with examples.

1. Suppose you have a database server running on one VM, a web server running on a second VM, and an application server running on a third VM. Because these servers interact with each other, the three VMs would ideally be kept together on one ESXi host to avoid loading the network with traffic between hosts. In this case, we would select the “Keep Virtual Machines Together” (affinity) option.

2. If you have an application-level cluster deployed within VMs in a DRS cluster, you may want to ensure an appropriate level of redundancy for that application-level cluster by keeping its nodes on different ESXi hosts (this provides additional availability). In this case, you could create an anti-affinity rule and select the “Separate Virtual Machines” option. Similarly, you can use this approach when one VM is the main domain controller and another is a replica of that domain controller (domain controllers use Active Directory replication). If the ESXi host with the main domain controller VM fails, users can connect to the replica domain controller VM, as long as the replica is running on a separate ESXi host.

3. An affinity rule between a VM and an ESXi host might be set for licensing reasons, in particular. In a VMware DRS cluster, virtual machines can migrate between hosts. Many software licensing policies (for example, those for database software) require you to buy a license for every host on which the software runs. This applies even if only one VM in the cluster runs the software. You should therefore prevent such a VM from migrating to other hosts and costing you more licenses. You can accomplish this with an affinity rule stating that the VM with the database software must run only on the selected host for which you have a license. In this case, select the “Virtual Machines to Hosts” option, choose “Must Run on Hosts in Group”, and specify a host group that contains the licensed host. (Alternatively, you could select “Must Not Run on Hosts in Group” and specify a group containing all unlicensed hosts.)
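
The three examples can be expressed as data and checked against a placement. The sketch below is a hypothetical rule model, not the vSphere API. The rule kinds, the VM names and the host names are illustrative. It only covers the "must" style of rules discussed above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    kind: str                       # "together", "separate", "must_run_on" or "must_not_run_on"
    vms: frozenset                  # VMs (or a VM group) the rule applies to
    hosts: frozenset = frozenset()  # host group, used only by the VM-to-host kinds


def violations(placement, rules):
    """Return the rules broken by `placement`, a mapping of VM name -> host name."""
    broken = []
    for rule in rules:
        used = [placement[vm] for vm in rule.vms]
        if rule.kind == "together":
            ok = len(set(used)) <= 1              # all VMs share one host
        elif rule.kind == "separate":
            ok = len(set(used)) == len(used)      # no two VMs share a host
        elif rule.kind == "must_run_on":
            ok = all(h in rule.hosts for h in used)
        elif rule.kind == "must_not_run_on":
            ok = all(h not in rule.hosts for h in used)
        else:
            raise ValueError(f"unknown rule kind: {rule.kind}")
        if not ok:
            broken.append(rule)
    return broken


rules = [
    Rule("together", frozenset({"db", "web", "app"})),                      # example 1
    Rule("separate", frozenset({"dc-main", "dc-replica"})),                 # example 2
    Rule("must_run_on", frozenset({"licensed-db"}), frozenset({"esx1"})),   # example 3
]
placement = {"db": "esx2", "web": "esx2", "app": "esx2",
             "dc-main": "esx1", "dc-replica": "esx3", "licensed-db": "esx1"}
print(violations(placement, rules))  # []
placement["dc-replica"] = "esx1"     # both domain controllers now share a host
print([r.kind for r in violations(placement, rules)])  # ['separate']
```

A scheduler that honors such rules would simply discard any candidate migration that makes `violations` non-empty.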

You can see how to set affinity rules in the setup section below.

Requirements for Setting Up a DRS Cluster

The following requirements must be met to set up a DRS cluster:

- CPU compatibility. Processors on the ESXi hosts must be as compatible as possible. They must be made by the same vendor and belong to the same family with equivalent instruction sets. Ideally, all ESXi hosts should use the same processor model.

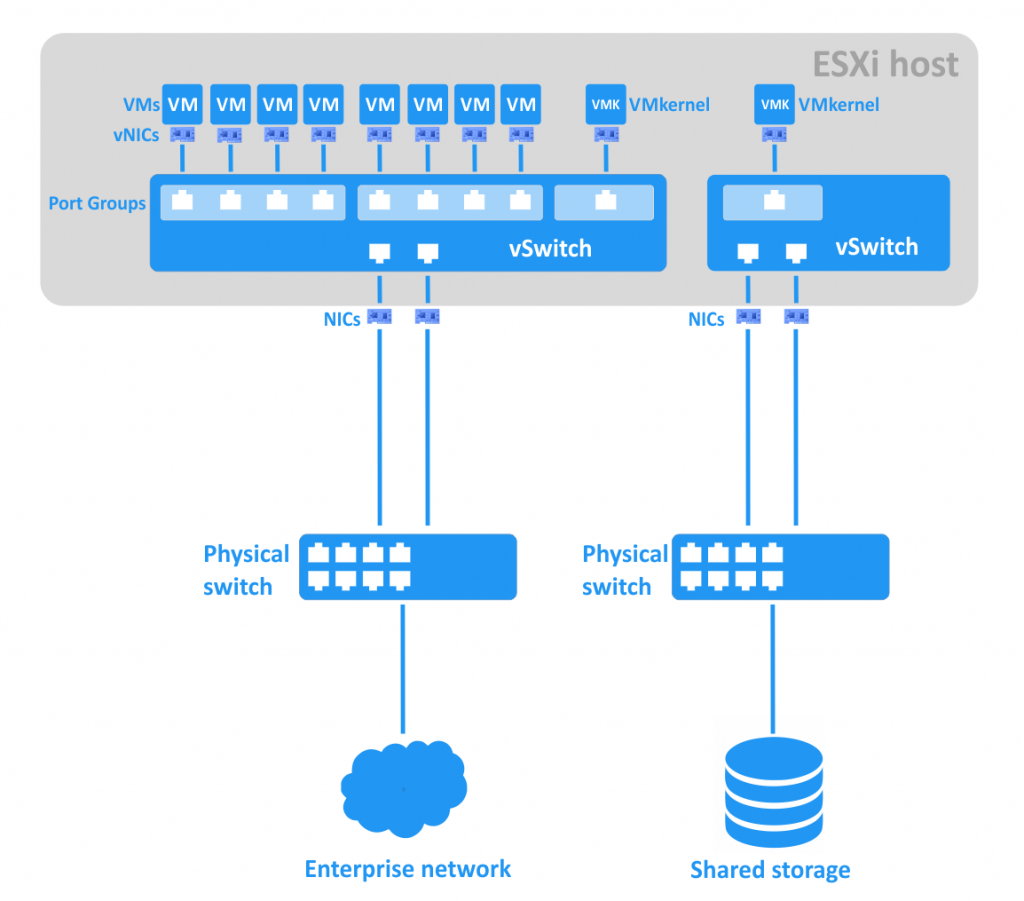

- Shared datastore. All ESXi hosts must be connected to shared storage, such as a SAN (Storage Area Network) or NAS (Network Attached Storage), so that every host can access the same datastores (for example, shared VMFS volumes on a SAN or NFS shares on a NAS).

- Network connection. All ESXi hosts must be connected to each other over the network. Ideally, you would have a separate vMotion network with at least 1 Gbit/s of bandwidth for migrating VMs between hosts.

- vCenter Server must be deployed to manage and configure the cluster.

- At least 2 ESXi servers must be installed and configured (3 or more ESXi servers are recommended).
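
The checklist above can be turned into a simple pre-flight check over an inventory you collect yourself. This is a hypothetical sketch. The `HostInfo` fields, the family labels and the messages are assumptions, not output from any VMware tool. It cannot detect subtler CPU incompatibilities.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HostInfo:
    name: str
    cpu_vendor: str        # e.g. "Intel" or "AMD"
    cpu_family: str        # processor family label (illustrative)
    datastores: frozenset  # names of the datastores this host can access
    vmotion_gbps: float    # bandwidth of the host's vMotion network link


def preflight(hosts, has_vcenter=True):
    """Return a list of human-readable problems; an empty list means the checks passed."""
    problems = []
    if not has_vcenter:
        problems.append("vCenter Server is required to manage the cluster")
    if len(hosts) < 2:
        problems.append("at least 2 ESXi hosts are required (3 or more recommended)")
    if len({(h.cpu_vendor, h.cpu_family) for h in hosts}) > 1:
        problems.append("CPU vendor or family differs between hosts")
    if hosts and not frozenset.intersection(*(h.datastores for h in hosts)):
        problems.append("no datastore is shared by all hosts")
    slow = [h.name for h in hosts if h.vmotion_gbps < 1]
    if slow:
        problems.append("vMotion link below 1 Gbit/s on: " + ", ".join(slow))
    return problems


esx1 = HostInfo("esx1", "Intel", "family-A", frozenset({"san-ds1", "local1"}), 10)
esx2 = HostInfo("esx2", "Intel", "family-A", frozenset({"san-ds1", "local2"}), 10)
print(preflight([esx1, esx2]))  # []
```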

How to Set Up the DRS Cluster

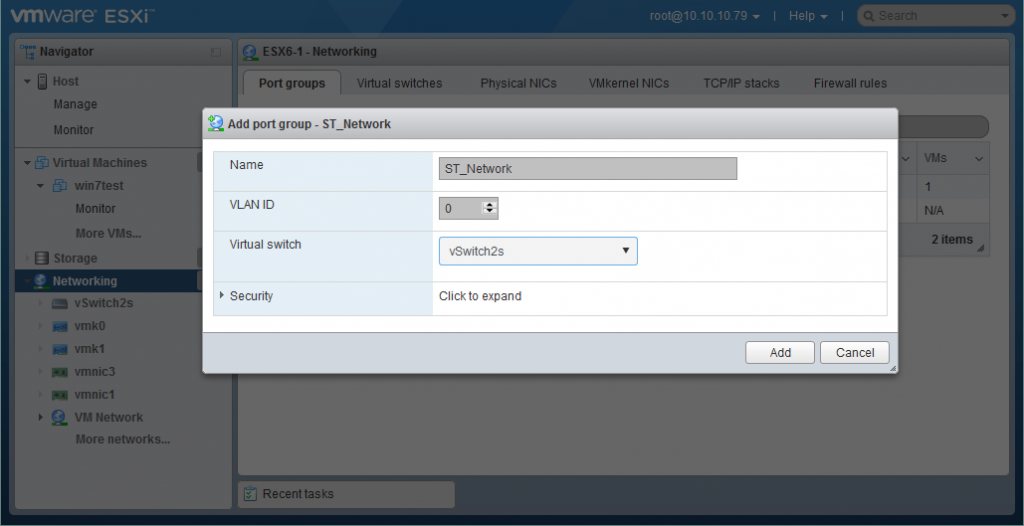

First, you need to configure the ESXi hosts, network connection, shared storage, and vCenter Server. After configuring those, you can set up your DRS cluster. Log in to vCenter Server with the vSphere Web Client. Create a datacenter in which to place your ESXi hosts: vCenter -> Datacenters -> New Datacenter. Then, select your datacenter and click Actions -> Add Host to add the ESXi hosts you need, following the wizard’s prompts. Now you are ready to create a cluster.

To create a cluster, do the following:

- Go to vCenter -> Hosts and Clusters.

- Right-click on your datacenter and select “New Cluster”.

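- Set the name of the cluster and check the box marked “Turn on DRS”. Click “OK” to finish.

- Move your ESXi hosts into the new cluster, for example by dragging them onto it in the inventory.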

If you have already created a cluster, follow these steps:

- Go to vCenter -> Clusters -> Your cluster name.

- Open Manage -> Settings tab.

- Select “vSphere DRS” and click “Edit”.

- Check the box marked “Turn ON vSphere DRS”. Click “OK” to finish.

Now that you have created the DRS cluster, you can configure DRS automation, DPM, affinity rules, and other options.

DRS automation. To set up load balancing, use the “DRS Automation” section. Here, you can select the Automation Level (Manual, Partially Automated, or Fully Automated), as well as the Migration Threshold (values from 1 to 5, with 1 being conservative and 5 being aggressive). If you want to set automation levels for individual virtual machines, check the appropriate box.

Power Management. You can set up DPM by selecting one of the following values: Off, Manual, or Automatic. As with the load balancing feature described above, you can select the DPM threshold value from 1 (conservative) to 5 (aggressive).
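
The settings above can be summarized as a small validated model. This is a hypothetical sketch for clarity, not the vSphere API. The field names mirror the UI labels in the text, and the defaults are assumptions. The `behavior()` mapping follows the commonly documented meaning of the three automation levels: Manual only recommends, Partially Automated places VMs automatically at power-on but only recommends migrations, and Fully Automated does both automatically.

```python
from dataclasses import dataclass

AUTOMATION_LEVELS = ("Manual", "Partially Automated", "Fully Automated")
DPM_MODES = ("Off", "Manual", "Automatic")


@dataclass(frozen=True)
class DrsSettings:
    automation_level: str = "Fully Automated"
    migration_threshold: int = 3     # 1 = conservative ... 5 = aggressive
    per_vm_automation: bool = False  # allow per-VM automation level overrides
    dpm_mode: str = "Off"
    dpm_threshold: int = 3           # 1 = conservative ... 5 = aggressive

    def __post_init__(self):
        # Reject values the cluster settings dialog would not offer.
        if self.automation_level not in AUTOMATION_LEVELS:
            raise ValueError(f"automation level must be one of {AUTOMATION_LEVELS}")
        if self.dpm_mode not in DPM_MODES:
            raise ValueError(f"DPM mode must be one of {DPM_MODES}")
        for name in ("migration_threshold", "dpm_threshold"):
            if not 1 <= getattr(self, name) <= 5:
                raise ValueError(f"{name} must be between 1 and 5")

    def behavior(self):
        """(initial placement, load-balancing migration) handling at this level."""
        return {
            "Manual": ("recommend", "recommend"),
            "Partially Automated": ("automatic", "recommend"),
            "Fully Automated": ("automatic", "automatic"),
        }[self.automation_level]


settings = DrsSettings(automation_level="Partially Automated", dpm_mode="Manual")
print(settings.behavior())  # ('automatic', 'recommend')
```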

Advanced Options. You can manually set advanced options for detailed tuning of your cluster. For example, you can set the option “MinImbalance” to the value 40 to change how the target imbalance is computed. The default value is 50, while 0 is the most aggressive. You can read more about this and explore all the advanced options in the VMware documentation.

Affinity Rules. To set up affinity and anti-affinity rules, follow these steps:

1. Go to vCenter -> Clusters -> your cluster name.

2. Go to the Manage -> Settings tab.

3. Select “DRS Rules” and click “Add”.

4. Set a name for the rule.

5. Select the rule type:

- Keep Virtual Machines Together (affinity)

- Separate Virtual Machines (anti-affinity)

- Virtual Machines to Hosts (affinity or anti-affinity)

6. Select VMs for the first two rule types, or VM groups, host groups, and a policy for the third rule type.

7. Click “OK” to finish.

Resource Pools. If you would like to create a resource pool for your VMs in a cluster, do the following:

- Go to vCenter -> Clusters -> Your cluster name.

- Click Actions -> New Resource Pool.

- Give the pool a name, then define limits and reservations for CPU as well as memory. Click “OK” when done.
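
A reservation is capacity guaranteed to the pool, and a limit is an upper bound on what the pool’s VMs may consume. The hypothetical sketch below illustrates both ideas. It is not vSphere’s admission control code. It assumes a fixed reservation (vSphere’s expandable reservation option turned off), so VMs can claim only what the pool itself reserves. The method names and units are illustrative.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ResourcePool:
    name: str
    cpu_reservation: int             # MHz guaranteed to the pool
    mem_reservation: int             # MB guaranteed to the pool
    cpu_limit: Optional[int] = None  # MHz cap; None means unlimited
    mem_limit: Optional[int] = None  # MB cap; None means unlimited
    vms: dict = field(default_factory=dict)  # VM name -> (cpu_res, mem_res)

    def power_on(self, vm, cpu_res, mem_res):
        """Admit `vm` only if its reservations fit in the pool's unreserved capacity."""
        used_cpu = sum(c for c, _ in self.vms.values())
        used_mem = sum(m for _, m in self.vms.values())
        if (used_cpu + cpu_res > self.cpu_reservation
                or used_mem + mem_res > self.mem_reservation):
            return False
        self.vms[vm] = (cpu_res, mem_res)
        return True

    def capped_usage(self, cpu_demand, mem_demand):
        """Total usage the pool's VMs may reach: demand clipped by each limit."""
        cpu = cpu_demand if self.cpu_limit is None else min(cpu_demand, self.cpu_limit)
        mem = mem_demand if self.mem_limit is None else min(mem_demand, self.mem_limit)
        return cpu, mem


pool = ResourcePool("prod", cpu_reservation=4000, mem_reservation=8192,
                    cpu_limit=8000, mem_limit=16384)
print(pool.power_on("db", 3000, 6144))   # True
print(pool.power_on("web", 2000, 1024))  # False: only 1000 MHz left unreserved
print(pool.capped_usage(12000, 4096))    # (8000, 4096)
```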

Now you can add your virtual machines to the resource pool. Here is how you can migrate an existing VM to the resource pool:

- Go to vCenter -> Virtual Machines.

- Select your virtual machine.

- Click Actions -> Migrate. The wizard window appears.

- Select “Change Host” in the “Migration Type” section and click “Next”.

- Select your resource pool in the “Select Destination Resource” section and click “Next”.

- In the “Review Selections” section, click “Finish”.

After configuration, you can check the state of your newly created DRS cluster. Just go to vCenter -> Clusters -> Your cluster name and click the “Summary” tab.

The Advantages of Using DRS

The main advantage of using a VMware DRS cluster is effective resource management with load balancing. This improves the quality of the services provided, and DPM also lets you save power (and, thus, money). You can control virtual machine placement manually or automatically, which makes maintenance and support more convenient.